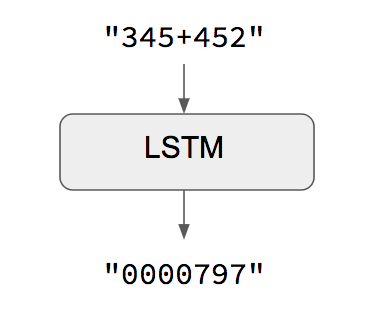

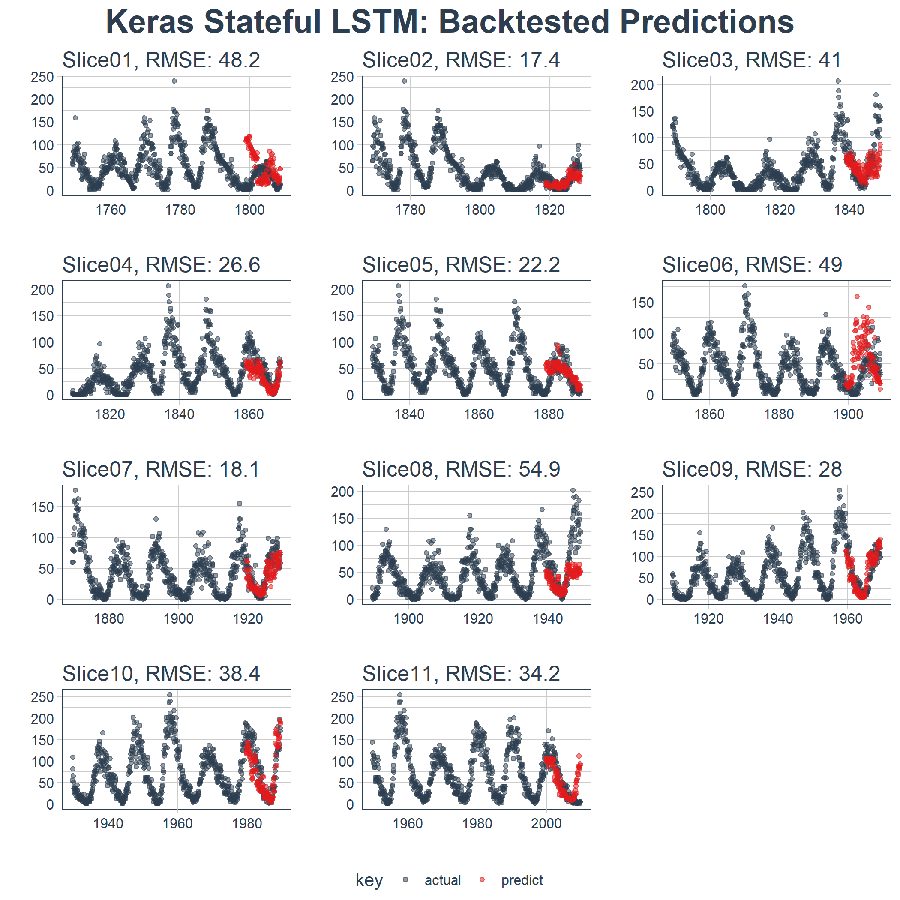

Does this encoder-decoder LSTM make sense for time series sequence to sequence? - Data Science Stack Exchange

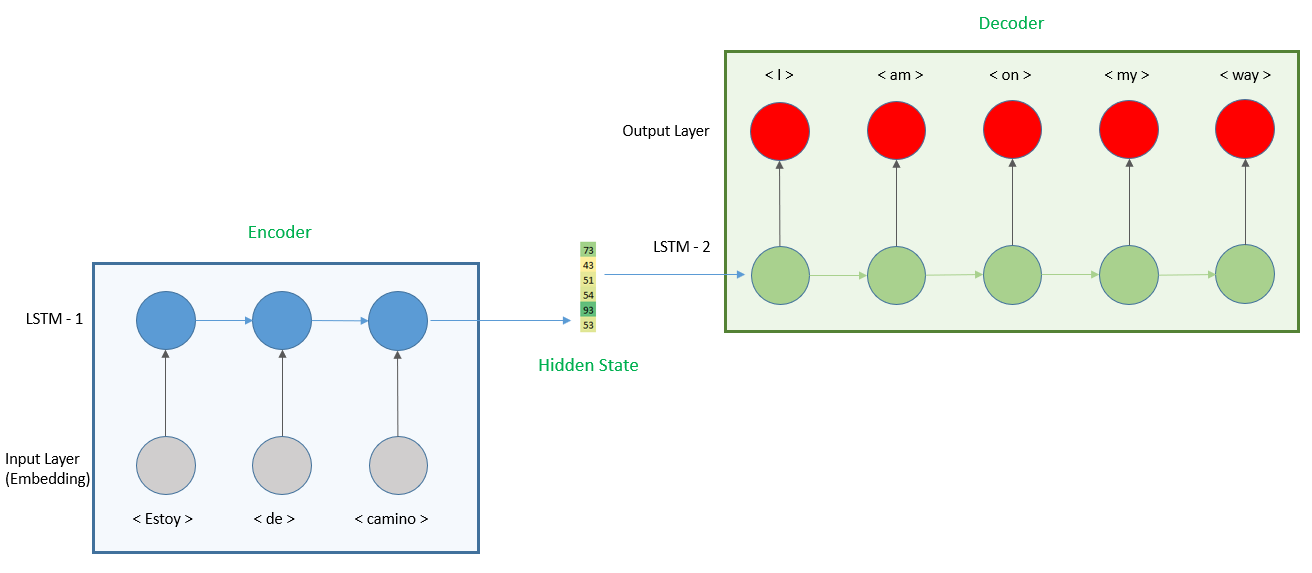

tensorflow - How to connect LSTM layers in Keras, RepeatVector or return_sequence=True? - Stack Overflow

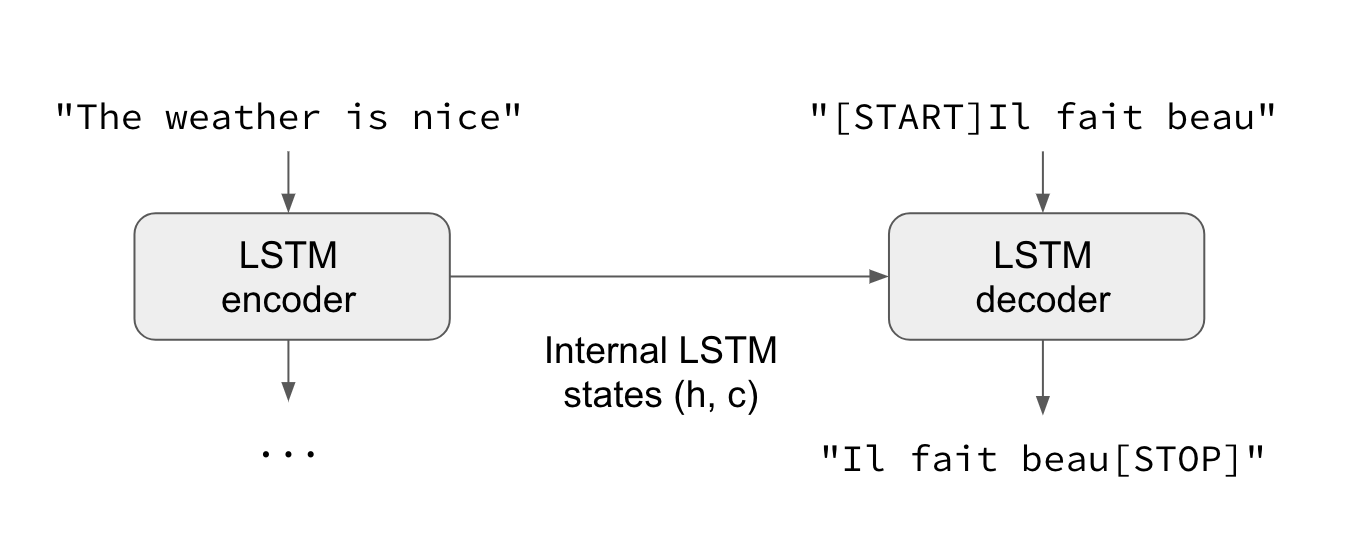

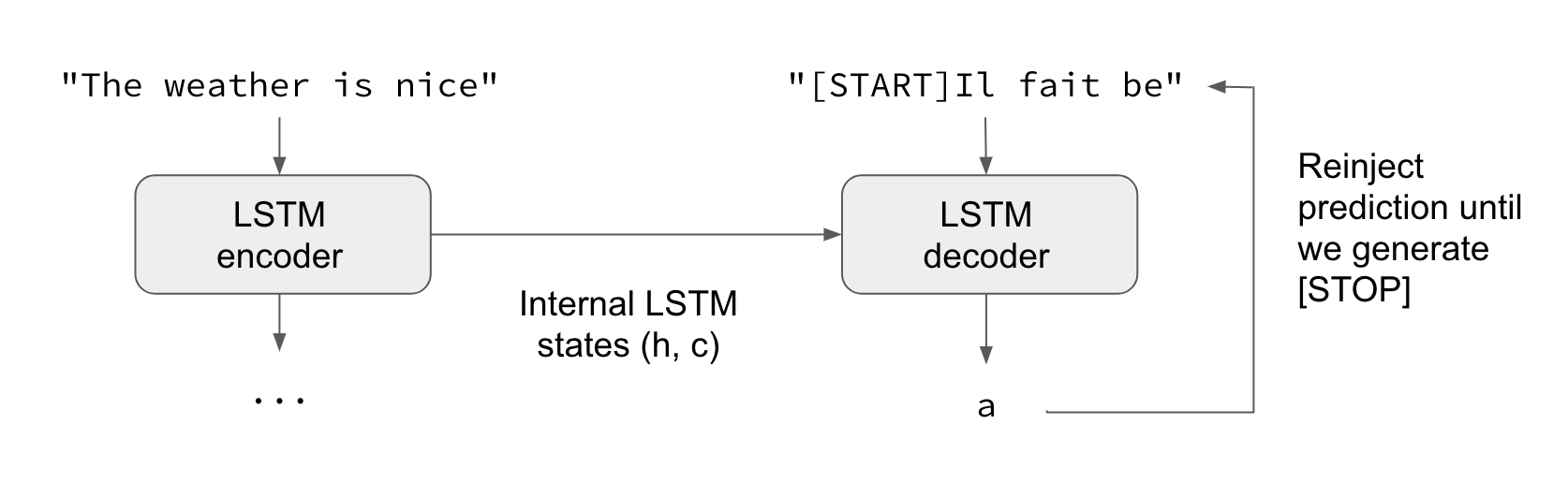

What is attention mechanism? Evolution of the techniques to solve… | by Nechu BM | Towards Data Science

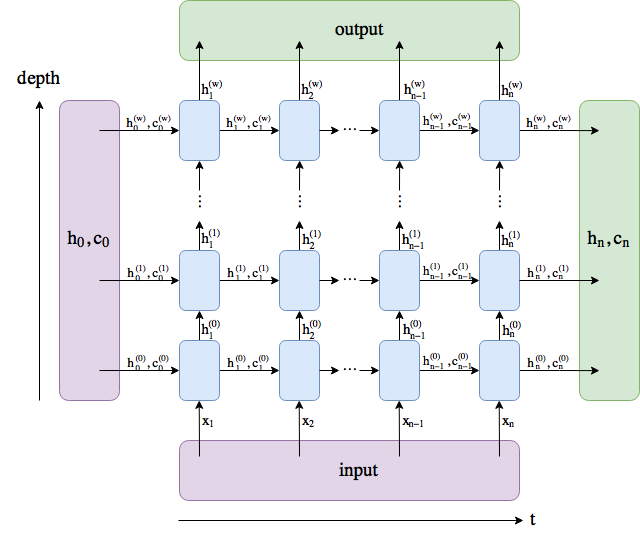

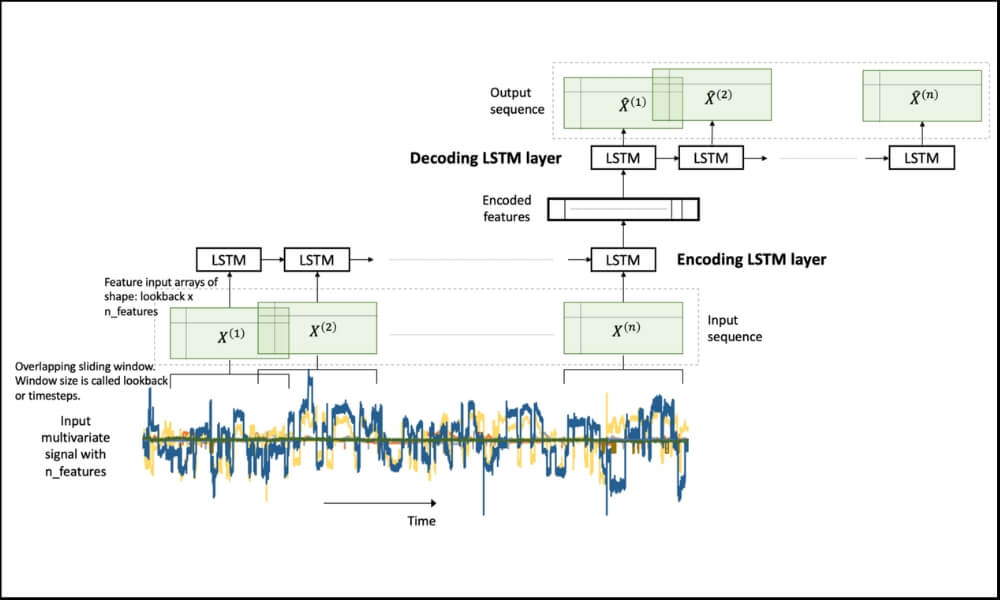

RNN-LSTM structure for sequential learning. Two hidden RNN-LSTM layers...

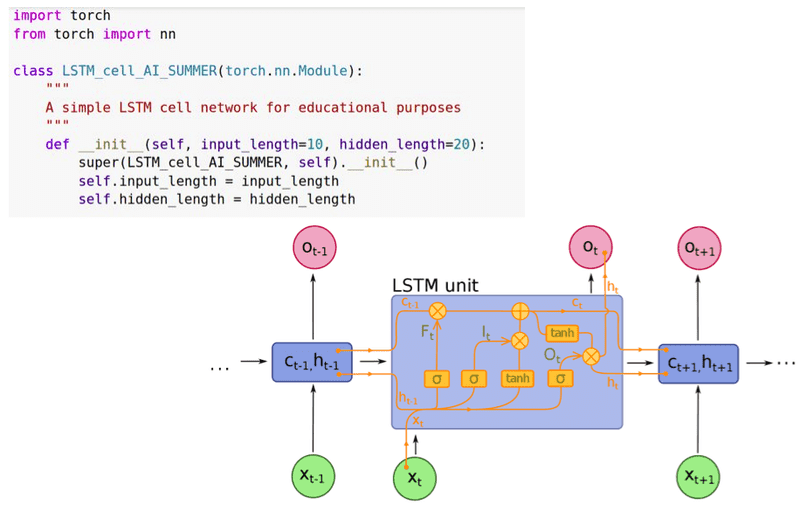

Dissecting The Role of Return_state and Return_seq Options in LSTM Based Sequence Models | by Suresh Pasumarthi | Medium