Real-Time Natural Language Processing with BERT Using NVIDIA TensorRT (Updated) | NVIDIA Technical Blog

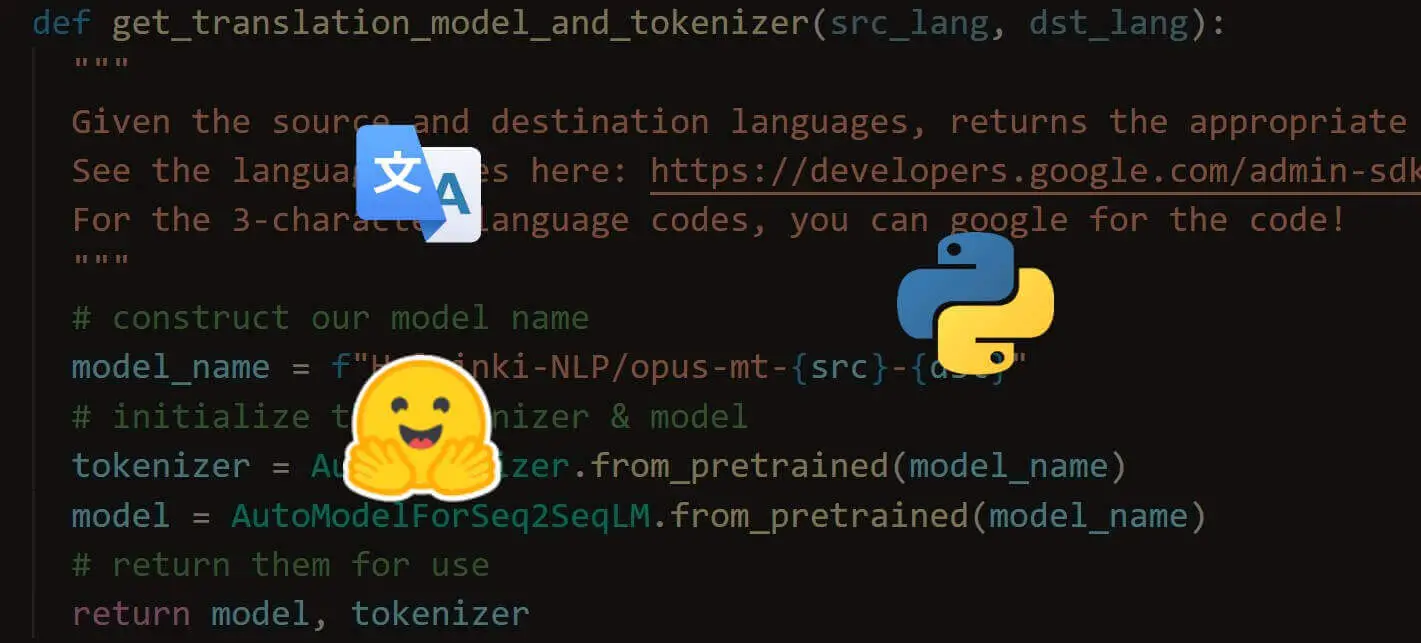

Sentiment Analysis with BERT and Transformers by Hugging Face using PyTorch and Python | Curiousily - Hacker's Guide to Machine Learning

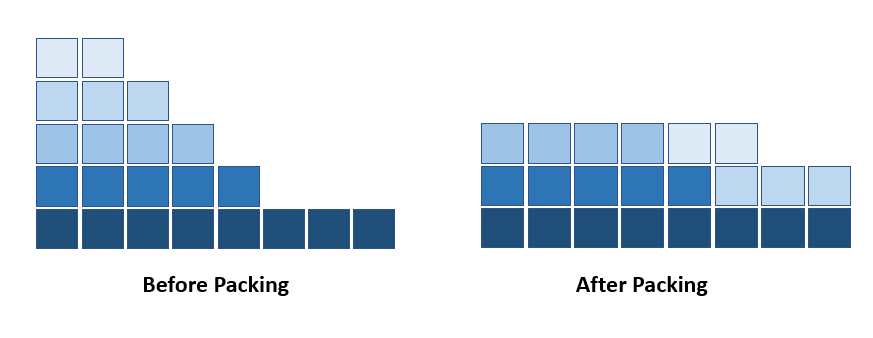

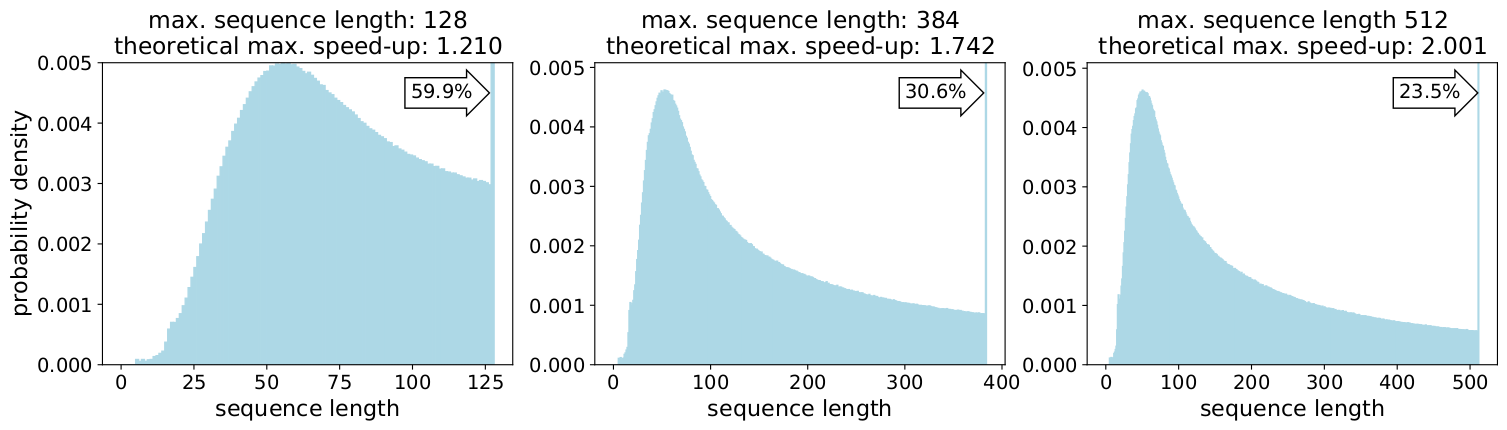

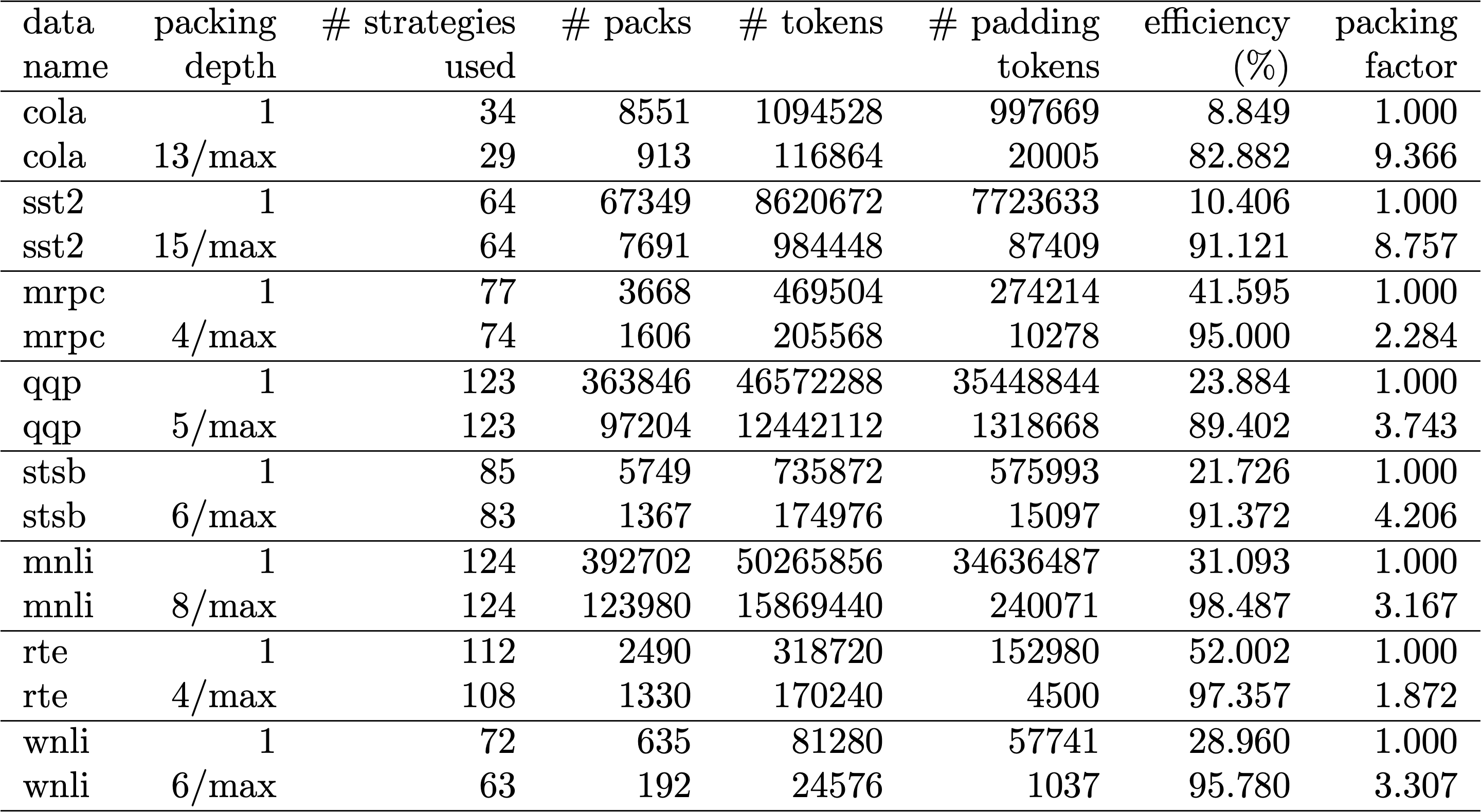

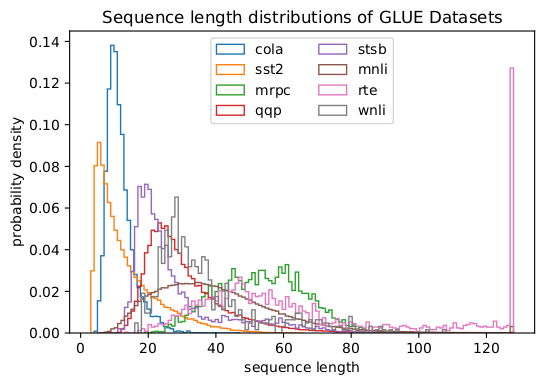

Introducing Packed BERT for 2x Training Speed-up in Natural Language Processing | by Dr. Mario Michael Krell | Towards Data Science

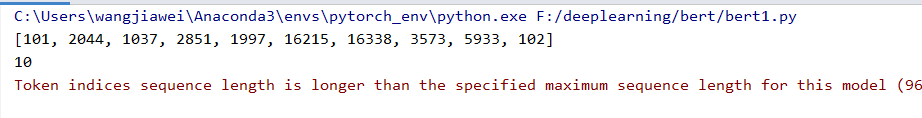

deep learning - Why do BERT classification do worse with longer sequence length? - Data Science Stack Exchange

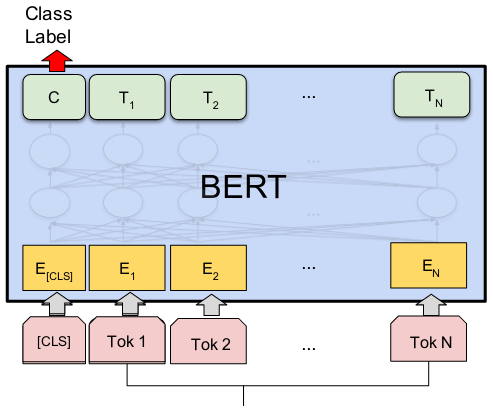

3: A visualisation of how inputs are passed through BERT with overlap...

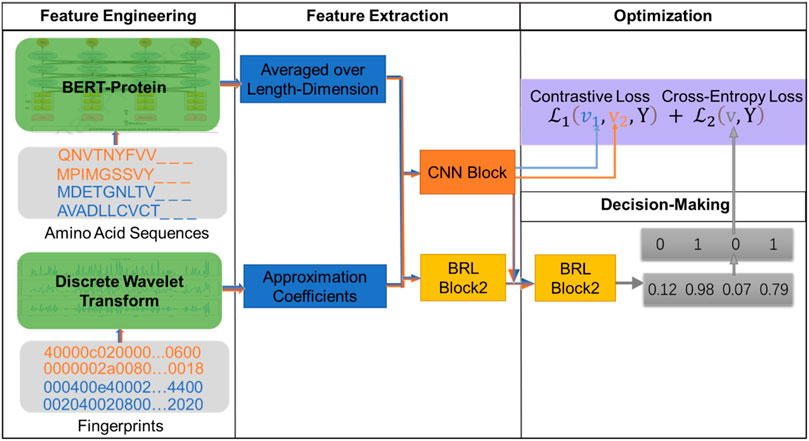

Frontiers | DTI-BERT: Identifying Drug-Target Interactions in Cellular Networking Based on BERT and Deep Learning Method

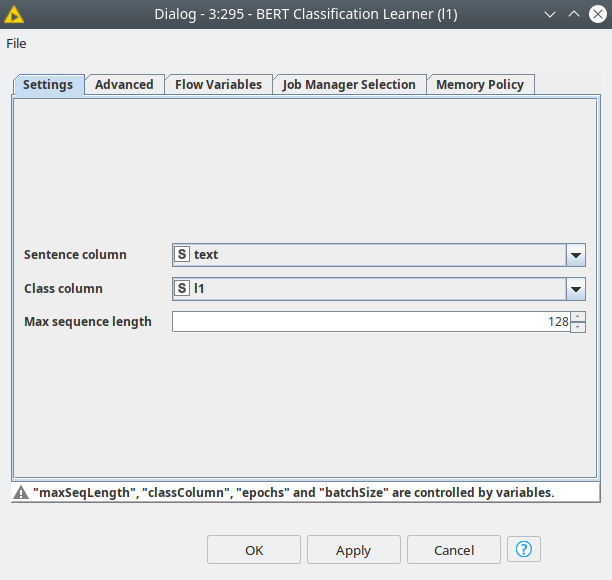

Multi-label Text Classification using BERT – The Mighty Transformer | by Kaushal Trivedi | HuggingFace | Medium